1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

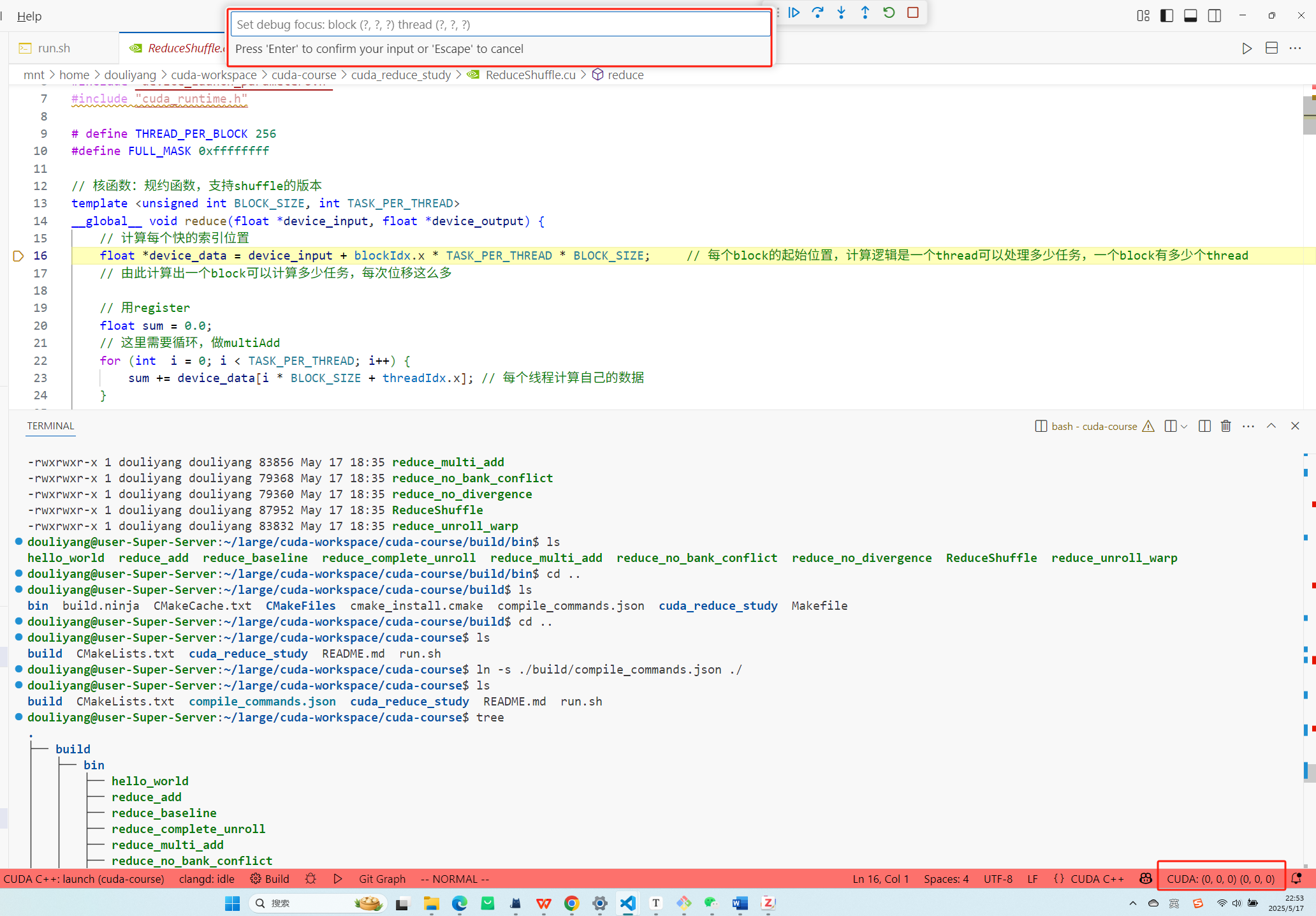

| #include <bits/stdc++.h>

#include <cstdlib>

#include <cuda.h>

#include <cuda_runtime_api.h>

#include <sys/time.h>

#include "device_launch_parameters.h"

#include "cuda_runtime.h"

# define THREAD_PER_BLOCK 256

#define FULL_MASK 0xffffffff

template <unsigned int BLOCK_SIZE, int TASK_PER_THREAD>

__global__ void reduce(float *device_input, float *device_output) {

float *device_data = device_input + blockIdx.x * TASK_PER_THREAD * BLOCK_SIZE;

float sum = 0.0;

for (int i = 0; i < TASK_PER_THREAD; i++) {

sum += device_data[i * BLOCK_SIZE + threadIdx.x];

}

for (int offset = 16; offset > 0; offset /= 2) {

sum += __shfl_down_sync(FULL_MASK, sum, offset);

}

__shared__ float shared_data[32];

unsigned int lane_id = threadIdx.x % warpSize;

unsigned int warp_id = threadIdx.x / warpSize;

if (lane_id == 0) {

shared_data[warp_id] = sum;

}

__syncthreads();

if (threadIdx.x == 0) {

device_output[blockIdx.x] = sum;

}

if (warp_id == 0) {

sum = (lane_id < (blockDim.x / 32)) ? shared_data[lane_id] : 0.0;

for (int offset = 16; offset > 0; offset /= 2) {

sum += __shfl_down_sync(FULL_MASK, sum, offset);

}

}

if (threadIdx.x == 0) {

device_output[blockIdx.x] = sum;

}

}

bool check(float *out, float *res, int n) {

for (int i = 0; i < n; i++) {

if (fabs(out[i] - res[i]) > 0.05) {

printf("Error: out[%d] = %f, res[%d] = %f\n", i, out[i], i, res[i]);

return false;

}

}

return true;

}

int main() {

const int N = 32 * 1024 * 1024;

float *host_input = (float *)malloc(N * sizeof(float));

float *device_input;

cudaMalloc((void **)&device_input, N * sizeof(float));

constexpr int block_num = 1024;

constexpr int task_per_block = N / block_num;

constexpr int task_per_thread = task_per_block / THREAD_PER_BLOCK;

float *host_output = (float *)malloc(block_num * sizeof(float));

float *device_output;

cudaMalloc((void **)&device_output, block_num * sizeof(float));

float *res = (float *)malloc(block_num * sizeof(float));

for (int i = 0; i < N; i++) {

host_input[i] = 2.0 * (float)drand48() - 1.0;

}

for (int i = 0; i < block_num; i++) {

res[i] = 0.0;

for (int j = 0; j < task_per_block; j++) {

res[i] += host_input[i * task_per_block + j];

}

}

cudaMemcpy(device_input, host_input, N * sizeof(float), cudaMemcpyHostToDevice);

dim3 Grid(block_num, 1);

dim3 Block(THREAD_PER_BLOCK, 1);

reduce<THREAD_PER_BLOCK, task_per_thread><<<Grid, Block>>>(device_input, device_output);

cudaMemcpy(host_output, device_output, block_num * sizeof(float), cudaMemcpyDeviceToHost);

if (check(res, host_output, block_num)) {

printf("================== Reduce Shuffle ==================\n");

printf("Result is correct!\n");

} else {

printf("================== Reduce Shuffle ==================\n");

printf("Result is incorrect!\n");

}

cudaFree(device_input);

cudaFree(device_output);

free(host_input);

free(host_output);

free(res);

return 0;

}

|